
Gyeongsu (Bob) Cho

I am a Ph.D. student in the Artificial Intelligence Graduate School at UNIST, where I am a member of the 3D Vision & Robotics Lab under the supervision of Prof. Kyungdon Joo. I received a B.Eng. in Mechanical Engineering from Chung-Ang University.

I am actively seeking internship opportunities with academic or industrial research teams.


Research

I am passionate about creating models that do not yet exist: models capable of reconstructing, animating, and generating dynamic 3D and 4D content, especially of animals and humans. My work combines synthetic data pipelines, parametric animal and human models, and generative methods to lift 2D images and videos into animatable 3D/4D representations. I enjoy building tools and models that unlock new creative and scientific possibilities in computer vision and graphics.


In submission (CVPR)
[paper] / [project page]

We present a synthetic animal video pipeline and a video transformer model for reconstructing temporally coherent 4D animal motion and global trajectories from monocular in-the-wild videos.


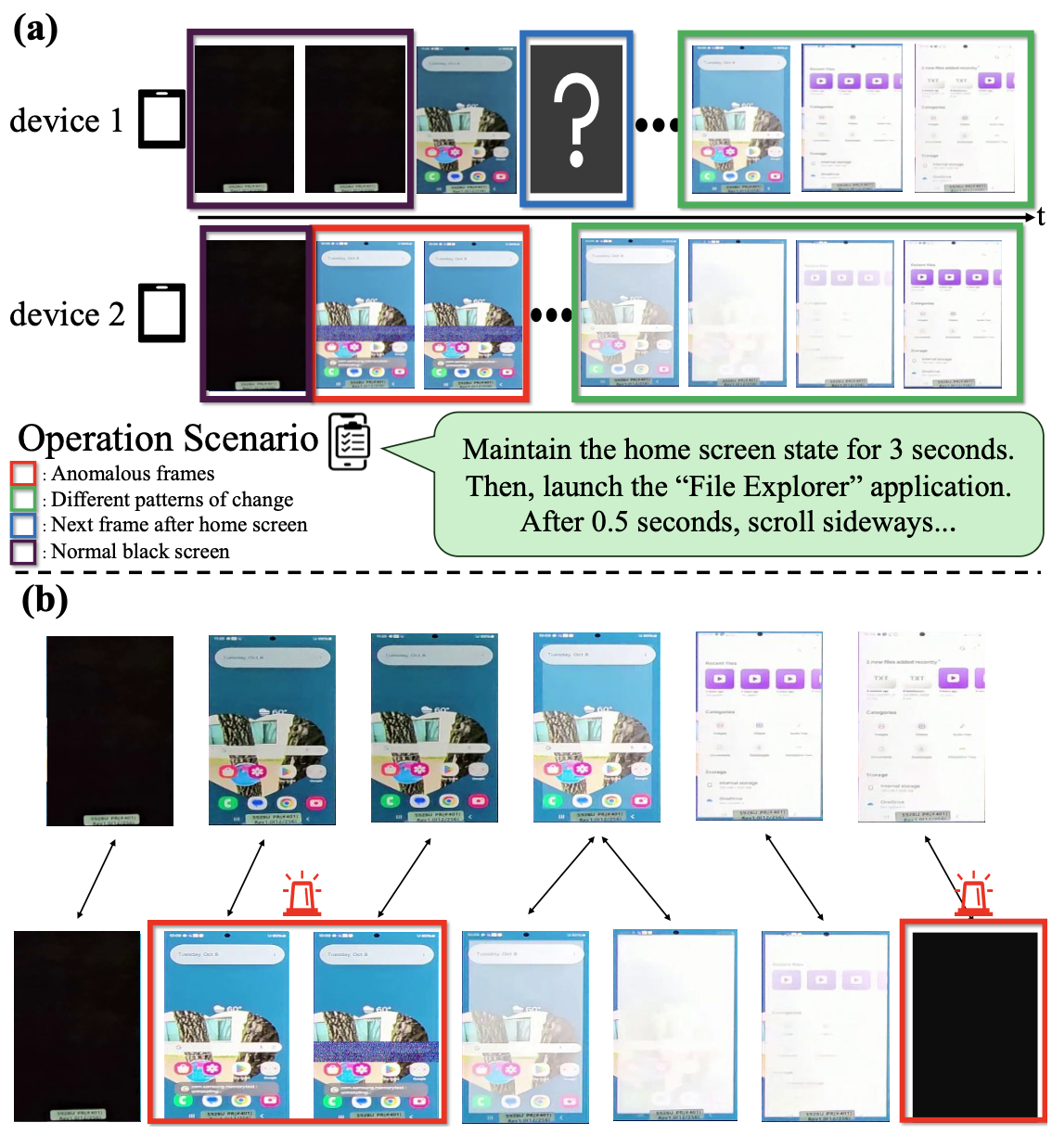

In submission (TCSVT)
[paper] / [project page]

We present a novel framework for video anomaly detection in which multiple devices are monitored concurrently by an external camera during operation. This work was done in collaboration with Samsung Electronics.


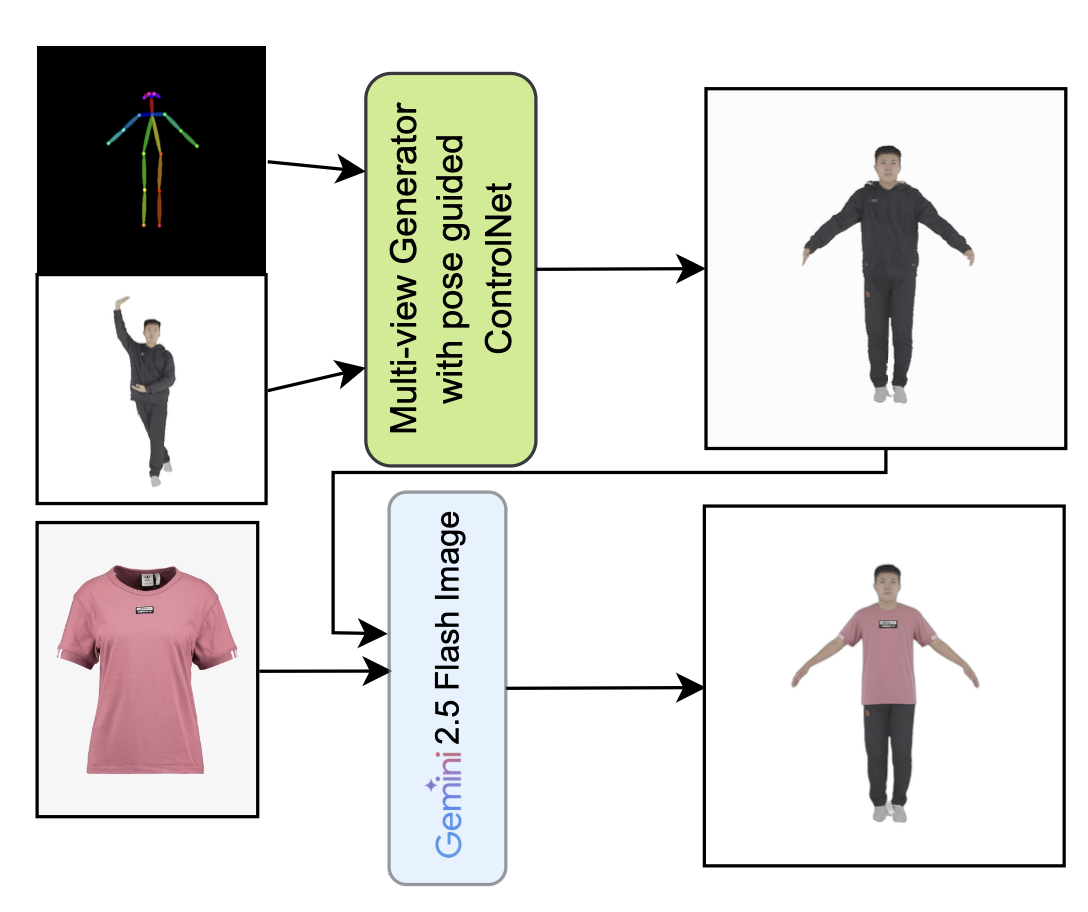

Seonghee Han*, Minchang Chung*, Gyeongsu Cho, Kyungdon Joo, Taehwan Kim
WACV 2026
[paper] / [project page]

This work generates pose-diverse, multi-view images for virtual try-on from a single frontal input image. By leveraging multi-view consistency and pose conditioning, the method produces realistic, view-consistent outfits under large pose variations.


Seonghee Han*, Minchang Chung*, Gyeongsu Cho, Kyungdon Joo, Taehwan Kim
ICCV 2025, Wild3D Workshop
[paper] / [project page]

Avatar++ generates an animation-ready 3D human avatar from a single image in seconds. By combining identity-preserving features with pose-guided multi-view synthesis, the framework enables fast and controllable avatar creation for downstream animation and XR applications.


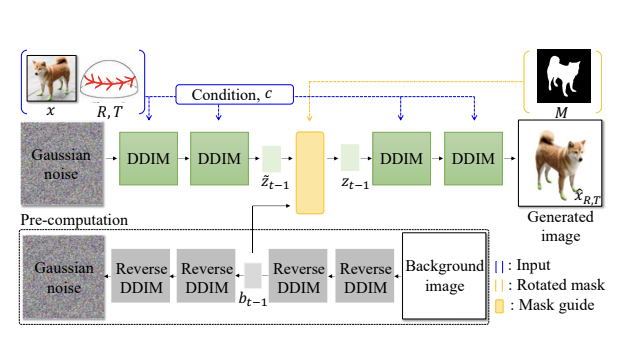

Gyeongsu Cho, Changwoo Kang, Donghyeon Soon, Kyungdon Joo
International Journal of Computer Vision (IJCV), 2025
[paper] / [project page]

DogRecon is a framework that reconstructs animatable 3D Gaussian dog models from a single RGB image. It leverages a canine prior and a reliable sampling strategy to obtain high-quality 3D shapes and realistic animations of dogs from in-the-wild photos.


Gyeongsu Cho, Changwoo Kang, Donghyeon Soon, Kyungdon Joo
CV4Animals Workshop @ CVPRW 2025 (Oral)
[paper] / [oral video]

This workshop version presents the initial DogRecon framework for animatable 3D Gaussian dog reconstruction from a single image, focusing on canine priors and practical reconstruction quality for in-the-wild photos.


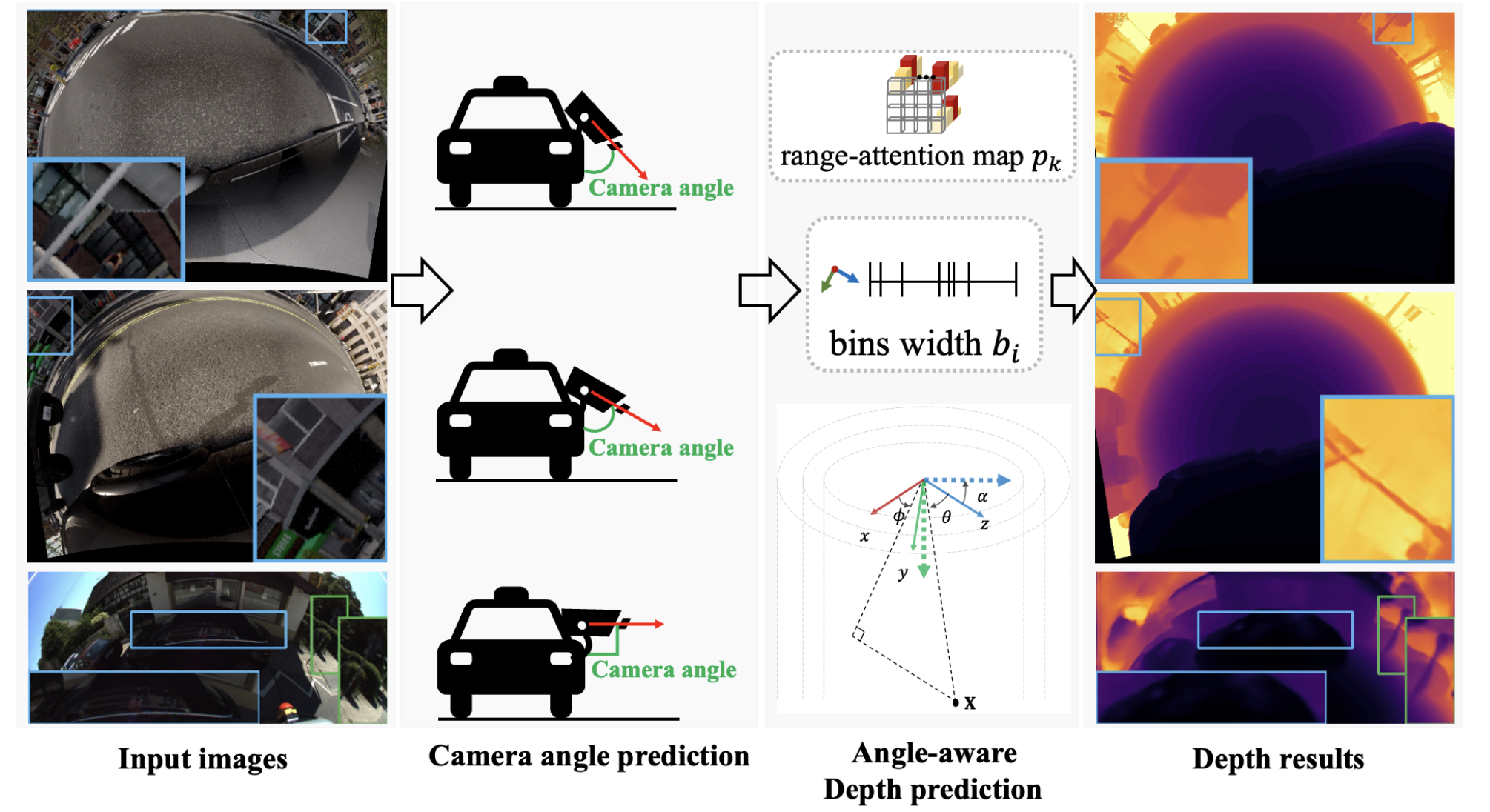

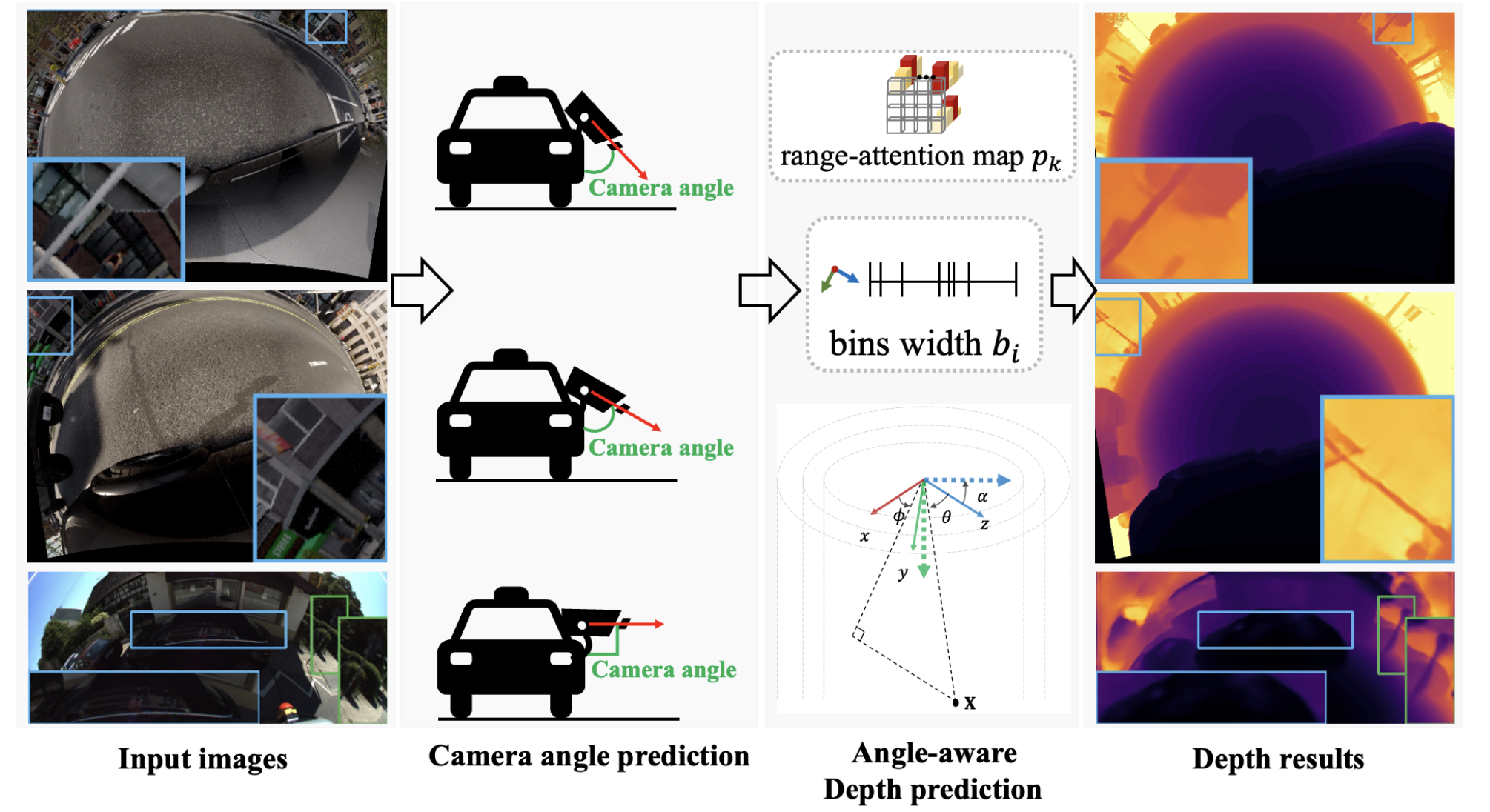

Jongsung Lee, Gyeongsu Cho*, Jeongin Park*, Kyongjun Kim*, Seongoh Lee*, Jung Hee Kim, Seong-Gyun Jeong, Kyungdon Joo
ICCV 2023
[paper] / [project page]

SlaBins introduces a slanted multi-cylindrical representation and adaptive depth bins to achieve accurate, dense depth estimation from automotive fisheye cameras in road environments. This work was done in collaboration with 42dot.

Education


Research & Industry Experience



Template from this website.